For many organizations, tools like ChatGPT and Microsoft Copilot have become familiar productivity aids in those tasks. But they seem to lack the ability to truly move the business forward ...

Our prospects ask a reasonable question: How can AI truly understand our business?

Stories about hallucinations, incorrect answers, or inconsistent output have made their way into boardrooms and buying conversations.

As AI adoption increases, so does concern about data quality and reliability.

As part of their evaluation, several prospects who became customers asked for a structured data-quality validation track, comparing our outputs directly with theirs. And for good reason. Without strong data foundations, businesses can’t expect optimal AI outcomes.

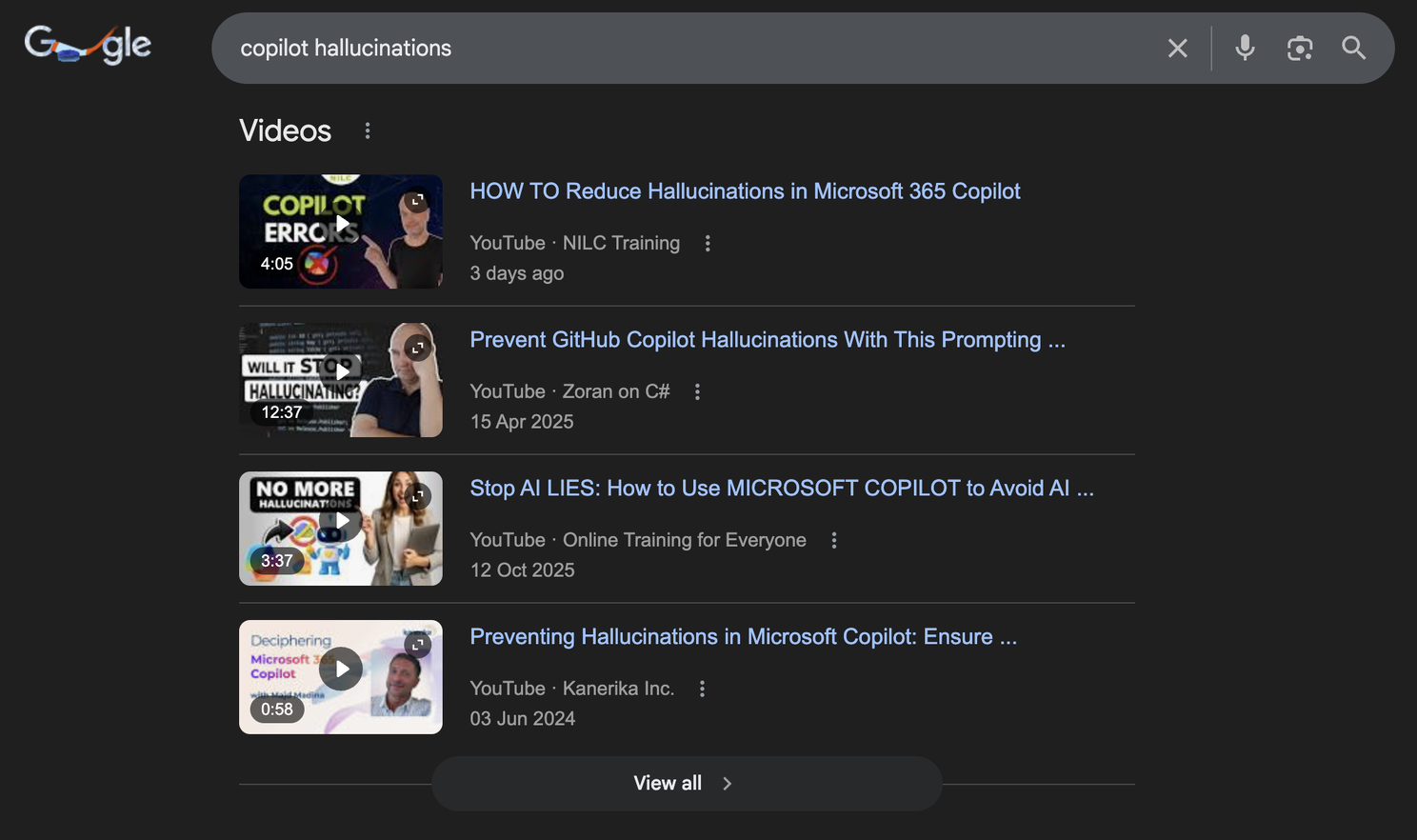

It's not just us saying Copilot (and others) hallucinate answers:

Why the question of AI data quality keeps coming up

Today's ChatGPTs and Copilots are designed to be general-purpose.

They are built to:

- answer a wide range of questions

- adapt to many different users

- work across many domains

This flexibility is what makes them powerful, but it’s also what makes their output unpredictable.

The reason: general-purpose AI tools are designed to continue, even when the information is incomplete or ambiguous.

They fill in gaps, infer context that is not there, or generate answers that sound plausible but are not grounded in fact.

This behavior is often described as hallucination. But this hallucination is not necessarily a defect in the technology. It is a consequence of how the technology is designed to work, and how users interact with it.

How to get reliable AI output

To achieve reliable AI answers, you design a system that:

- starts from internal company data

- understands how offerings are structured

- validates information instead of freely generating it

- surfaces what is relevant without relying on prompts

A single ‘general’ AI solution is not good at this, because it lacks the focus. It’s similar to how a Chief Product Officer shouldn’t also run the company’s HR department.

And AI systems should be evaluated based on the job they are designed to do.

- General-purpose AI tools excel at flexibility and exploration.

- Purpose-built systems excel at consistency and execution.

When the goal is to understand an entire business and support sales teams at scale, purpose-built systems operate under very different design principles.

Different tools, different uses

The way an AI system is built strongly influences what it is good at — and what it is not.

Understanding this distinction helps explain why tools like ChatGPT or Microsoft Copilot and purpose-built systems like uman can coexist in the same organization, while serving very different roles.

In fact, our customer Plat4mation uses both Copilot and uman, and explains what the different uses are:

“Copilot can imagine a lot of things that are not relevant, but uman is quite focused. It understands exactly how our offering catalog is built up and which offerings we can propose in which condition to which customer.”

- Stef Knaepkens, Chief AI Officer at Plat4mation

Read this blog on the combination of uman and Copilot.

General-purpose AI: supporting individual productivity

General-purpose AI tools are designed to be flexible and broadly applicable.

Just like Stef from Plat4mation says in the video, they are commonly used to draft and rewrite text, perform searches and explore ideas.

In this context, the AI acts as an assistant to the individual user.

The quality of the output depends largely on:

- the question that is asked

- the context that is provided

- the experience of the user

For personal productivity and exploratory work, this model works well. It allows individuals to move faster in day-to-day tasks, much like how people use these tools on their personal accounts at home.

However, this flexibility also means that:

- different users may receive different answers

- results may vary across similar situations

- consistency is difficult to guarantee

These tools are optimized for helping people think and work faster, not for enforcing shared structure or consistent execution.

Purpose-built AI: supporting organizational execution

Purpose-built AI systems (like uman) are designed with a narrower scope, depending on your definition.

Rather than supporting any task, they are built to perform a specific job within an organization, with a speed and efficiency no human can match.

In the context of sales execution, this means:

- working from internal company data

- understanding how offerings, services, and constraints are structured

- surfacing relevant information automatically

- producing output that is consistent across users and situations

In this model, the AI is embedded in the way the organization works. This reduces the chance of mistakes and hallucinations to practically zero, as long as the input content is correct.

We’ll let our customer Plat4mation speak again:

“To scale our organization we need uman much more to structure our portfolio, to get feedback from what’s working and what’s not working. This is something that we can only get from a solution like uman.”

Input: where reliability actually starts

Reliable AI output always starts with reliable input. This may sound obvious, but it is so often overlooked.

Many AI tools are designed to operate without a fixed source of truth. They rely on a combination of general knowledge, inferred context, and user-provided input to generate responses.

This approach works well when the goal is exploration or personal productivity. But it completely breaks down when the goal is to reflect how a specific business actually operates.

Here's our customer Flexso in an interview with Trends (datanews).

Part of the quote in English:

"You can't just release AI on an unstructured database."

Why using good internal data is so important

Internal company data is not neutral.

It reflects:

- how offerings are structured

- which services can be combined

- which constraints apply

- what has worked in the past

But this information is often:

- fragmented across systems

- undocumented or outdated

- inconsistently interpreted across teams

Naturally, uman fixes both.

General-purpose AI systems of course don’t have access to this reality by default. And even if they do on paper (like a Copilot), they are designed too broadly to take full advantage of it. On top of that, user input is, and will always be, a bottleneck for reliable output.

When general-purpose AI tools are asked to reason about a business without being grounded in its internal data, they must infer. And inference is where reliability is lost.

The risk of external reasoning

When AI systems are not anchored in internal data, two things tend to happen:

- The output becomes generic. Recommendations sound reasonable, but lack relevance.

- The output becomes inconsistent. Different users receive different answers to similar questions.

Neither of these outcomes is acceptable in business and sales execution, where decisions need to be repeatable and aligned across the organization.

Purpose-built systems don’t start from what could be true, but from what is true inside the organization.

By grounding AI in internal data from the start, the system no longer needs to guess. It can validate, select, and structure information instead of generating freely.

This allows organizations to fully leverage the power of AI tools and to scale.

The key to success: validation over generation

Why pure generation is dangerous

General-purpose AI tools are designed to generate answers. But not necessarily the correct answer.

They predict what a helpful response might look like based on patterns, probabilities, and the context provided by the user. This is effective for many tasks, but it means the system is optimized to continue, even when information is incomplete or ambiguous.
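As a rough illustration of that point, here is a toy sketch in Python. It is not how any specific product works: the candidate answers and their probabilities are made up, and `generate_answer` and `ask_many` are hypothetical names. It only shows the general idea of picking an answer by probability, which means the system always answers and the answer can differ between runs.

```python
import random

# Hypothetical probabilities for possible answers to the question
# "Which offering fits this customer?". The numbers are invented for
# illustration; a real model derives them from patterns in its training data.
CANDIDATE_ANSWERS = {
    "Offering A": 0.5,
    "Offering B": 0.3,
    "An offering that does not exist": 0.2,
}


def generate_answer(rng):
    """Sample one answer by probability. There is no 'I don't know' option."""
    answers = list(CANDIDATE_ANSWERS)
    weights = list(CANDIDATE_ANSWERS.values())
    return rng.choices(answers, weights=weights, k=1)[0]


def ask_many(times, seed=0):
    """Ask the same question several times and collect the answers."""
    rng = random.Random(seed)
    return [generate_answer(rng) for _ in range(times)]


if __name__ == "__main__":
    # Same question, asked repeatedly: the answers are not all identical.
    print(ask_many(10))
```

Nothing in this sketch checks whether an answer is actually true for the business. It only checks what is likely to sound right.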

In business, this behavior is risky.

When decisions are made based on generated assumptions rather than validated facts, small inaccuracies can quickly propagate across deals, teams, and regions.

Remember that quote from Plat4mation above?

“Copilot can imagine a lot of things that are not relevant, ...”

Why validation creates reliable AI behavior

Once AI systems are grounded in internal data, the next question is how that data is used.

Rather than generating new information, purpose-built systems focus on selecting, checking, and structuring information that already exists within the organization.

This means:

- information is matched against known data

- recommendations are constrained by real offerings and conditions

- output is limited to what can be supported by internal sources

When required information is missing, the system does not fill in the gap.

It signals that the information is incomplete.

This may feel restrictive compared to general-purpose AI, but in practice, it's what makes the output trustworthy.
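The validation behavior described above can be sketched in a few lines of Python. This is a minimal illustration under assumptions, not uman's actual implementation: the catalog entries, the `REQUIRED_FIELDS` list, and the `recommend` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Offering:
    name: str
    # Conditions an opportunity must meet, e.g. {"region": "EU"}.
    conditions: dict


# Hypothetical internal catalog: the only source recommendations may come from.
CATALOG = [
    Offering("Platform Implementation", {"industry": "retail", "region": "EU"}),
    Offering("Managed Services", {"region": "EU"}),
    Offering("Advisory Workshop", {"industry": "finance"}),
]

# Hypothetical fields that must be known before anything is recommended.
REQUIRED_FIELDS = ("industry", "region")


def recommend(opportunity):
    """Select offerings from the catalog; never invent one."""
    missing = [f for f in REQUIRED_FIELDS if not opportunity.get(f)]
    if missing:
        # Signal the gap instead of filling it in.
        return {"status": "incomplete", "missing": missing, "offerings": []}

    matches = [
        offering.name
        for offering in CATALOG
        if all(opportunity.get(k) == v for k, v in offering.conditions.items())
    ]
    # Sorting keeps the output identical for identical input.
    return {"status": "ok", "missing": [], "offerings": sorted(matches)}


if __name__ == "__main__":
    print(recommend({"industry": "retail", "region": "EU"}))
    print(recommend({"industry": "retail"}))
```

With this toy catalog, a retail opportunity in the EU gets "Managed Services" and "Platform Implementation", no matter who asks. An opportunity without a region gets no recommendation at all, only a note that "region" is missing.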

From “best possible answer” to “correct answer”

General-purpose AI tools are designed to provide the best possible answer to a given question.

Purpose-built AI systems are designed to provide the correct answer within a defined context.

By prioritizing validation over generation, AI systems stop reasoning in abstract terms and start operating within the reality of the business.

All of this is to make sure that:

- the same opportunity leads to the same recommendation

- different sellers tell the same story

- output reflects what can actually be delivered

Validation instead of generation makes this possible.

To be clear, the AI is still generating text as its output. It's just not generating outside of its own knowledge base.

By shifting AI from a generative role to a validating role, organizations reduce risk while increasing confidence in how AI is used across the sales process.

What you should remember

Purpose-built AI systems are designed around a single objective.

Instead of asking what could be a useful answer, the system asks what can be confirmed within this business context.

It technically limits the space in which the AI can operate, but in return it produces output that can be trusted across users, teams, and situations. So in fact, this limit becomes what makes it valuable.

Business and sales execution does not reward creativity. It rewards consistency.

The same opportunity should lead to the same recommendation. The same offering should be positioned the same way. The same constraints should apply, regardless of who is asking.

By validating information against internal data instead of generating assumptions, purpose-built systems ensure that AI output reflects what the organization can actually deliver.

This is why validation-driven systems behave differently, and why a business needs them to properly scale AI beyond personal productivity.

Want to learn more about purpose-built AI in your (sales) organization?